The PM20 commodities/wares archive: part 4 of the data donation to Wikidata

2022-12-23 by Joachim Neubert

After the digitized material of the persons, countries/subjects and companies archives of the 20th Century Press Archives (PM20) had been made available via Wikidata, the last part, the wares archive, has now been added.

The wares archive is about products and commodities. It was founded in 1908 at the Hamburg "Kolonialinstitut" (colonial institute) as part of the larger press archives and was maintained by the Hamburg Institute of International Economics (HWWA) until 1998. It is now part of the cultural heritage that ZBW has decided to make freely available to the largest possible extent, as part of its Open Access and Open Science policy. While the digitized pages are provided reliably under stable URIs on https://pm20.zbw.eu, the metadata has been donated to Wikidata.

For each ware (e.g., coal), there was a folder (or a series of folders) about the ware or commodity in general, its cultivation, extraction or production, trade, industry, and utilization. Separate folders were created for each country where the ware was important. For some important wares, such as coal, this amounted to thousands of documents in the general section, and likewise for traditional producing countries like the UK, but also for countries with more ephemeral deposits, like the Philippines. In total, almost 37,000 press articles about coal production and consumption in the first half of the last century are accessible online.

The coverage of the archives (overview) extends to quite specialized sectors, such as amber or cotton machines. Sadly, only a small part of the commodities/wares archive is freely available on the web. The labour-intensive preparation of the folders, which was unavoidable because of intellectual property law, could only be achieved for one ninth of the documents. The rest of this material up to 1946, and another time slice with 600,000 pages up to 1960, can be accessed as digitized microfilms on the ZBW premises (film overview 1908-1946, 1947-1960, systematic structure). Additionally, 15,000 microfiches cover the full time range of the archives until 1998.

Integration of the metadata into Wikidata

As with the countries/subjects archive, we used existing Wikidata items for the country category structure of the archive. Most of the commodities and wares categories were also already present as items, and we matched and linked them via OpenRefine. Only a handful of special, artificial categories (like Axe, hatchet, hammer) had to be created.

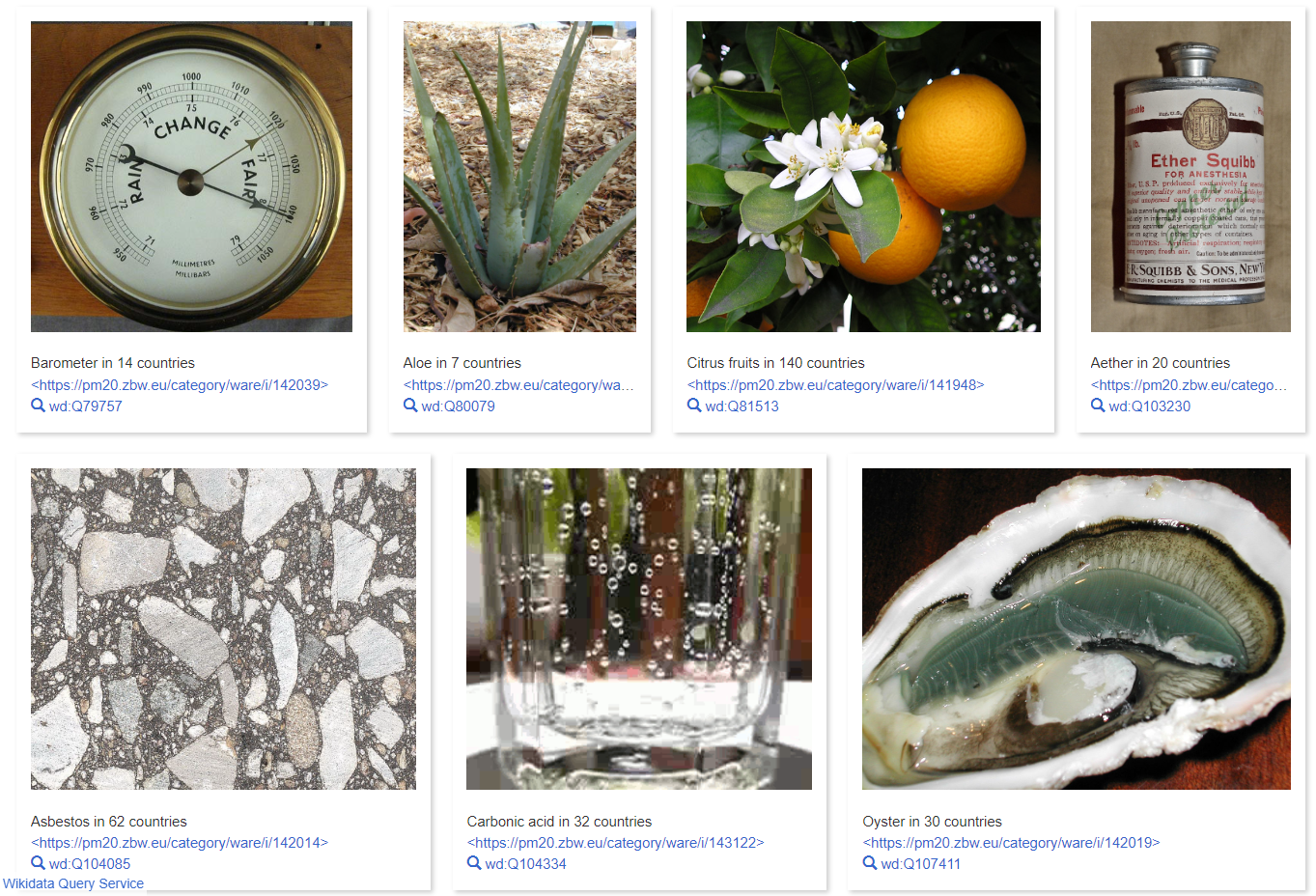

We then built a Wikidata item for each folder of the archive, defined by a commodity/ware category and a country category, and linking to the corresponding folder in the press archives (e.g., Coal : United States of America). For the general, non-country-specific folders, the commodities/ware category was combined with the item for "world", as in Banana : World. The diversity of the archive's topics in Wikidata shows up in a colorful picture (live query), providing an entry point into the archive.
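To make the folder model concrete, here is a minimal Python sketch, assuming each folder item can be reduced to its pair of categories: a ware and either a country or "World" for the general folders. The class `WareFolder` and the function `folders_for_ware` are hypothetical illustrations, not part of any PM20 or Wikidata tooling; the real items also carry identifiers and links to the digitized folders, which are not modeled here.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical model, for illustration only: a PM20 ware folder is
# identified by a commodity/ware category plus a country category.
WORLD = "World"


@dataclass(frozen=True)
class WareFolder:
    ware: str
    country: Optional[str] = None  # None marks a general, non-country folder

    @property
    def geo(self) -> str:
        # General folders are combined with "World" instead of a country
        return self.country if self.country is not None else WORLD

    @property
    def label(self) -> str:
        # Label pattern mirroring examples like "Coal : United States of America"
        return f"{self.ware} : {self.geo}"


def folders_for_ware(ware: str, countries: List[str]) -> List[WareFolder]:
    # One general folder, plus one folder per country where the ware mattered
    return [WareFolder(ware)] + [WareFolder(ware, c) for c in countries]


if __name__ == "__main__":
    for folder in folders_for_ware("Coal", ["United Kingdom", "Philippines"]):
        print(folder.label)
```

Using `None` for the general folders keeps the "World" substitution in a single place, so every folder, general or country-specific, is still described by exactly two categories.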

In total, 2,891 items representing PM20 ware/category folders were created. With this last archive, the integration of the 20th Century Press Archives' metadata into Wikidata is complete. Every folder of the archives is represented in Wikidata and links to digitized press clippings and other material about its topic. How these Wikidata items can be used in queries and applications will be the subject of another ZBW labs blog entry.