Google's AudioOverview feature: a game changer in (scientific) communication?

2024-10-14 by Timo Borst

2024-10-14 by Timo Borst

2024-01-30 by Joachim Neubert

2022-12-23 by Joachim Neubert

After the digitized material of the persons, countries/subjects and companies archives of the 20th Century Press archives had been made available via Wikidata, now the last part from the wares archive has been added.

This wares archive is about products and commodities. Founded in 1908 at the Hamburg "Kolonialinstitut" (colonial institute) as part of the larger press archives, it was maintained by the Hamburg Institute of International Economics (HWWA) until 1998. Now it is part of the cultural heritage, which ZBW has decided to make freely available to the largest possible extent, as part of its Open Access and Open Science policy. While the digitized pages are provided reliably under stable URIs on https://pm20.zbw.eu, the metadata has been donated to Wikidata.

For each ware (e.g., coal), there was a folder - or a series of folders - about the ware or commodity in general, its cultivation, extraction or production, trade, industry, and utilization. For each country, for which this ware was important, separate folders were created. For some important wares, such as coal, that amounted to thousands of documents in the general section, as well as for traditional production countries like the UK, but also more ephemeral deposits like the Philippines. In total, almost 37,000 press articles about coal production and consumption in the first half of the last century are accessible online.

The coverage of the archives (overview) extends to quite special sectors, such as amber or cotton machines. Sadly, only a small part of the commodities/wares archives is freely available on the web. The labour-intensive preparation of the folders was inevitable due to intellectual property law, but could only be achieved for one ninth of the documents. The rest of this material up to 1946, and another time slice with 600,000 pages until 1960, can be accessed as digitized microfilms on the ZBW premises (film overview 1908-1946, 1947-1960, systematic structure). Additionally, 15,000 microfiches cover the full time range of the archives until 1998.

Integration of the metadata into Wikidata

For the country category structure of the archive we used, as in the countries/subjects archive, existing Wikidata items. Most of the commodities and wares categories were also already present as items, and we matched and linked them via OpenRefine. Only a handful of special, artificial categories (like Axe, hatchet, hammer) had to be created.

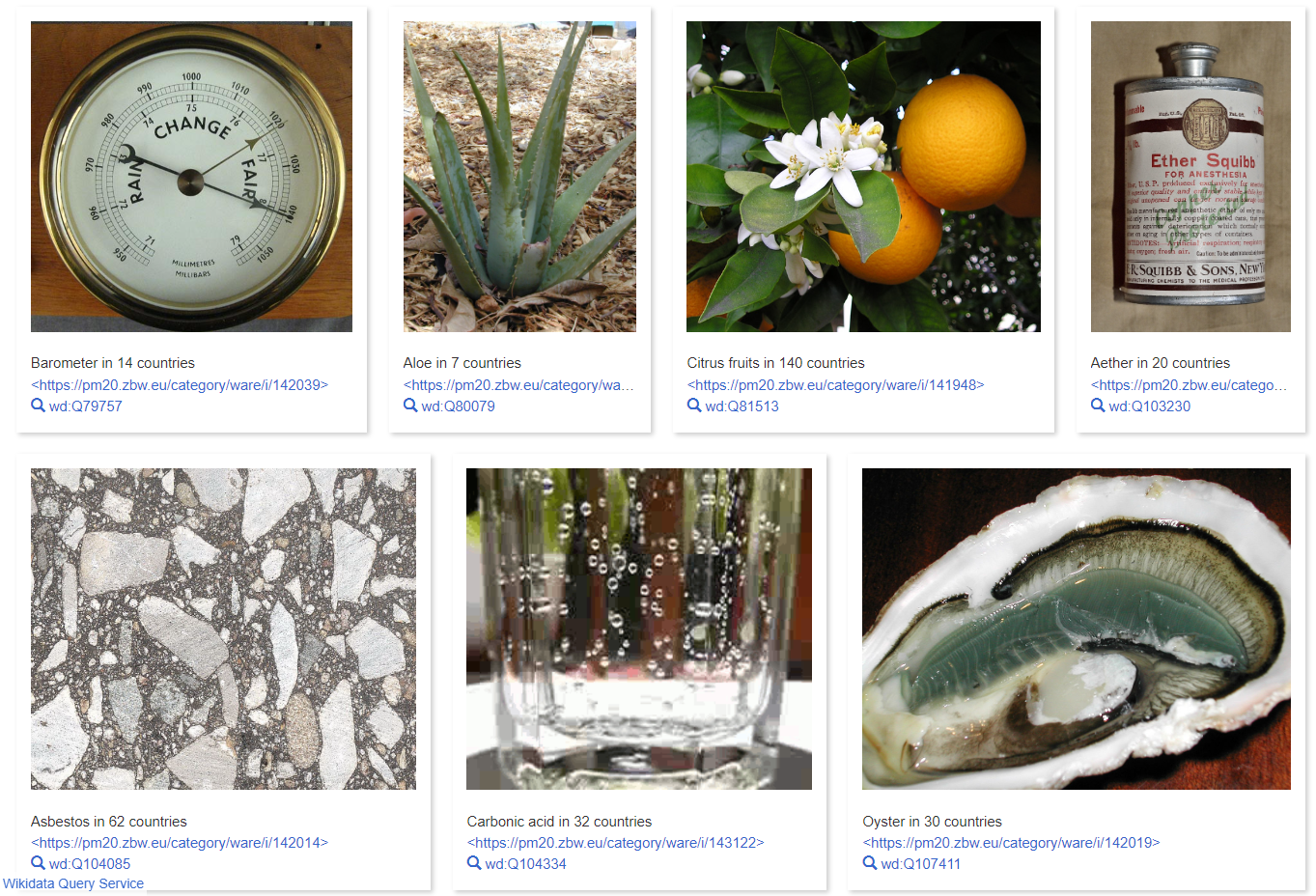

We then, for each folder of the archive, built an item in Wikidata, defined by a commodity/ware and a country category, and linking to the corresponding folder in the press archives (e.g., Coal : United States of America). For the general, non-country specific folders, the commodities/ware category was combined with the item for "world", as in Banana : World. The diversity of the archive's topics in Wikidata shows up in a colorful picture (live query), providing an entry point into the archive.

In total, 2891 items representing PM20 ware/category folders were created. With this last archive, the integration of the 20th Century Press Archives' metadata into Wikidata is complete. Every folder of the archives is represented in Wikidata and links to digitized press clippings and other material about its topic. How these Wikidata items can be used in queries and applications will be the subject of another ZBW labs blog entry.

2022-02-02 by Joachim Neubert

Currently, the STW Thesaurus for Economics is mapped to Wikidata, one sub-thesaurus at a time. For the next part, "B Business Economics", we have improved our prior OpenRefine matching process. Though the use case - matching concepts in a multilingual thesaurus with lots of synonyms - shouldn't be uncommon, we couldn't find guidelines on the web, so we describe here what works for us.

OpenRefine has the non-obvious capability to run multiple reconciliations on different columns, and to combine selected matched items from these columns. It is possible to use different endpoints for the reconciliation, in our case https://wikidata.reconci.link/en/api for English and https://wikidata.reconci.link/de/api for German Wikidata labels.
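Outside of OpenRefine, the idea of querying one endpoint per label language can be sketched roughly as follows. This is only an illustration: the helper name `build_reconciliation_request` and the sample labels are hypothetical, the `queries` form parameter is assumed to follow the usual reconciliation service convention, and no request is actually sent.

```python
import json
from urllib.parse import urlencode

# Endpoints as named in the text above.
ENDPOINTS = {
    "en": "https://wikidata.reconci.link/en/api",
    "de": "https://wikidata.reconci.link/de/api",
}


def build_reconciliation_request(labels, lang, limit=3):
    """Return (url, form_body) for a batch reconciliation query.

    `labels` is a list of label strings in the language `lang`.
    The function name and parameters are hypothetical.
    """
    queries = {
        f"q{i}": {"query": label, "limit": limit}
        for i, label in enumerate(labels)
    }
    body = urlencode({"queries": json.dumps(queries)})
    return ENDPOINTS[lang], body


# One request per language column; the labels are made-up samples.
url_en, body_en = build_reconciliation_request(["Accounting"], "en")
url_de, body_de = build_reconciliation_request(["Rechnungswesen"], "de")
```

The two result sets would then be compared per concept, which mirrors reconciling two columns separately and combining the selected matches.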

2021-12-13 by Joachim Neubert

ZBW inherited a large trove of historical company information - annual reports, newspaper clippings and other material about more than 40,000 companies and other organizations around the world. Parts of these, in particular all about German and British entities until 1949, are available free and online in the companies section (list by country) of the 20th Century Press Archives. More digitized folders with material about companies in and outside of Europe up to 1960 are accessible only on ZBW premises, due to intellectual property rights.

As a part of its support for Open Science, ZBW has made all metadata of the 20th Century Press Archives available under a CC0 license. In order to make the folders more easily accessible for business history research as well as for the general public, we have added links for every single folder to Wikidata. In addition to that, the metadata about companies and organizations, such as inception date or links to board members, has been added to the large amount of company data already available in Wikidata. This continues the PM20 data donation of ZBW to Wikidata, as described earlier for the persons archives and the countries/subjects archives. The activities were carried out - with notable help of volunteers - and documented in the WikiProject 20th Century Press Archives.

The mapping process to Wikidata items

Many of the PM20 company and organization folders deal with existing items in Wikidata. If GND identifiers were assigned to these items, we directly created links to PM20 companies with the same id, and were done. Matching and linking to Wikidata items without the help of a unique identifier, however, was more of a challenge. Unlike person names, company names change frequently, or are spelled differently in different times or languages. Not uncommonly, the entities themselves change through mergers and acquisitions, and may or may not have been represented by a new folder in PM20, or by a different item in Wikidata. Subsidiaries may be subsumed under the parent organization, or be separate entities. While it is relatively easy to split items in Wikidata, in the folders with printed newspaper clippings and reports it meant digging through sometimes hundreds of pages to single out a company retrospectively. So early decisions about the cutting and delimitation of folders often stuck for the following decades. All of that made it more difficult not only to obtain matches at all, but also to decide if indeed the same entity is covered.

2021-01-29 by Joachim Neubert

The world's largest public newspaper clippings archive comprises lots of material of great interest particularly for authors and readers in the Wikiverse. ZBW has digitized the material from the first half of the last century, and has put all available metadata under a CC0 license. More so, we are donating that data to Wikidata, by adding or enhancing items and providing ways to access the dossiers (called "folders") and clippings easily from there.

Challenges of modelling a complex faceted classification in Wikidata

That had been done for the persons' archive in 2019 - see our prior blog post. For persons, we could just link from existing or a few newly created person items to the biographical folders of the archive. The countries/subjects archives provided a different challenge: The folders there were organized by countries (or continents, or cities in a few cases, or other geopolitical categories), and within the country, by an extended subject category system (available also as SKOS). To put it differently: Each folder was defined by a geo and a subject facet - a method widely used in general purpose press archives, because it allowed a comprehensible and, supported by a signature system, unambiguous sequential shelf order, indispensable for quick access to the printed material.

2020-12-07 by Joachim Neubert

Here we describe the process of building the interactive SWIB20 participants map, created by a query to Wikidata. The map was intended to help participants of SWIB20 make contacts in the virtual conference space. However, in compliance with GDPR we wanted to avoid publishing personal details. So we chose to publish a map of the institutions to which the participants are affiliated. (Obvious downside: the 9 unaffiliated participants could not be represented on the map.)
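To give an impression of what such a query might look like, here is a minimal sketch that builds a Wikidata Query Service map query from a list of institution items. It is not the query actually used for SWIB20: the function name `build_map_query` and the item identifiers are placeholders, and only the coordinate location property (P625) and the `#defaultView:Map` directive are taken from Wikidata itself.

```python
def build_map_query(institution_qids):
    """Build a SPARQL query that plots the given Wikidata items on a map.

    `institution_qids` is a list of Wikidata item identifiers such as "Q42".
    """
    values = " ".join(f"wd:{qid}" for qid in institution_qids)
    return f"""#defaultView:Map
SELECT ?institution ?institutionLabel ?coord WHERE {{
  VALUES ?institution {{ {values} }}
  ?institution wdt:P625 ?coord .
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en". }}
}}"""


# Placeholder identifiers only; these are not participant affiliations.
query = build_map_query(["Q1", "Q2"])
print(query)
```

Because only institution items enter the query, no personal data about participants is needed to draw the map.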

We suppose that the method can be applied to other conferences and other use cases - e.g., the downloaders of scientific software or the institutions subscribed to an academic journal. Therefore, we describe the process in some detail.

2020-11-16 by Timo Borst

by Franz Osorio, Timo Borst

Against this background, we took the opportunity to collect, process and display some impact or signal data with respect to literature in economics from different sources, such as 'traditional' citation databases, journal rankings, and community platforms or altmetrics indicators:


2019-11-21 by Wolfgang Riese

Back in 2015 the ZBW Leibniz Information Center for Economics (ZBW) teamed up with the Göttingen State and University Library (SUB), the Service Center of the Göttingen library federation (VZG) and GESIS Leibniz Institute for the Social Sciences in the *metrics project funded by the German Research Foundation (DFG). The aim of the project was: “… to develop a deeper understanding of *metrics, especially in terms of their general significance and their perception amongst stakeholders.” (*metrics project about).

In the practical part of the project the following DSpace based repositories of the project partners participated as data sources for online publications and – in the case of EconStor – also as implementer for the presentation of the social media signals:

In the work package “Technology analysis for the collection and provision of *metrics” of the project an analysis of currently available *metrics technologies and services had been performed.

As stated by [Wilsdon 2017], currently suppliers of altmetrics “remain too narrow (mainly considering research products with DOIs)”, which makes it difficult to acquire *metrics data for repositories like EconStor with working papers as the main content. Up to now it is unusual – at least in the social sciences and economics – to create DOIs for this kind of document. Only the resulting final article published in a journal will receive a DOI.

Based on the findings in this work package, a test implementation of the *metrics crawler had been built. The crawler had been actively deployed from early 2018 to spring 2019 at the VZG. For the aggregation of the *metrics data the crawler had been fed with persistent identifiers and metadata from the aforementioned repositories.

At this stage of the project, the project partners still expected that the persistent identifiers (e.g. handle, URNs, …), or their local URL counterparts, as used by the repositories could be harnessed to easily identify social media mentions of their documents, e.g. for EconStor:

2019-10-24 by Joachim Neubert

ZBW is donating a large open dataset from the 20th Century Press Archives to Wikidata, in order to make it better accessible to various scientific disciplines such as contemporary, economic and business history, media and information science, to journalists, teachers, students, and the general public.

The 20th Century Press Archives (PM20) is a large public newspaper clippings archive, extracted from more than 1500 different sources published in Germany and all over the world, covering roughly a full century (1908-2005). The clippings are organized in thematic folders about persons, companies and institutions, general subjects, and wares. During a project originally funded by the German Research Foundation (DFG), the material up to 1960 has been digitized. 25,000 folders with more than two million pages up to 1949 are freely accessible online. The fine-grained thematic access and the public nature of the archives make it, to the best of our knowledge, unique across the world (more information on Wikipedia) and an essential source of research data for some of the disciplines mentioned above.

The data donation does not only mean that ZBW has assigned a CC0 license to all PM20 metadata, which makes it compatible with Wikidata. (Due to intellectual property rights, only the metadata can be licensed by ZBW - all legal rights on the press articles themselves remain with their original creators.) The donation also includes investing a substantial amount of working time (during, as planned, two years) devoted to the integration of this data into Wikidata. Here we want to share our experiences regarding the integration of the persons archive metadata.

2018-10-23 by Joachim Neubert

On the 27th and 28th of October, the kick-off for the "Kultur-Hackathon" Coding da Vinci is held in Mainz, Germany, organized this time by GLAM institutions from the Rhein-Main area: "For five weeks, devoted fans of culture and hacking alike will prototype, code and design to make open cultural data come alive." New software applications are enabled by free and open data.

For the first time, ZBW is among the data providers. It contributes the person and company dossiers of the 20th Century Press Archive. For about a hundred years, the predecessor organizations of ZBW in Kiel and Hamburg had collected press clippings, business reports and other material about a wide range of political, economic and social topics, about persons, organizations, wares, events and general subjects. During a project funded by the German Research Foundation (DFG), the documents published up to 1948 (about 5.7 million pages) had been digitized and are made publicly accessible with the corresponding metadata, until recently solely in the "Pressemappe 20. Jahrhundert" (PM20) web application. Additionally, the dossiers - for example about Mahatma Gandhi or the Hamburg-Bremer Afrika Linie - can be loaded into a web viewer.

As a first step to open up this unique source of data for various communities, ZBW has decided to put the complete PM20 metadata* under a CC-Zero license, which allows free reuse in all contexts. For our Coding da Vinci contribution, we have prepared all person and company dossiers which already contain documents. The dossiers are interlinked among each other. Controlled vocabularies (for, e.g., "country", or "field of activity") provide multi-dimensional access to the data. Most of the persons and a good share of organizations were linked to GND identifiers. As a starter, we had mapped dossiers to Wikidata according to existing GND IDs. That allows running queries for PM20 dossiers completely on Wikidata, making use of all the good stuff there. An example query shows the birth places of PM20 economists on a map, enriched with images from Wikimedia Commons. The initial mapping was much extended by fantastic semi-automatic and manual mapping efforts by the Wikidata community. So currently more than 80 % of the dossiers about - often rather prominent - PM20 persons are linked not only to Wikidata, but also connected to Wikipedia pages. That offers great opportunities for mash-ups with further data sources, and we are looking forward to what the "Coding da Vinci" crowd may make out of these opportunities.

Technically, the data has been converted from an internal intermediate format to still quite experimental RDF and loaded into a SPARQL endpoint. There it was enriched with data from Wikidata and extracted with a construct query. We have decided to transform it to JSON-LD for publication (following practices recommended by our hbz colleagues). So developers can use the data as "plain old JSON", with the plethora of web tools available for this, while linked data enthusiasts can utilize sophisticated Semantic Web tools by applying the provided JSON-LD context. In order to make the dataset discoverable and reusable for future research, we published it persistently at zenodo.org. With it, we provide examples and data documentation. A GitHub repository gives you additional code examples and a way to address issues and suggestions.
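The two ways of reading such data can be illustrated with a tiny, made-up record. The field name, the identifier and the context below are hypothetical and do not reflect the actual structure of the published dataset; the sketch only shows that the same document works as plain JSON and, via its context, as linked data.

```python
import json

# Hypothetical record; the real dataset's fields and context may differ.
record_text = """
{
  "@context": {"name": "http://schema.org/name"},
  "@id": "http://example.org/folder/1",
  "name": "Example person"
}
"""

record = json.loads(record_text)

# "Plain old JSON" view: skip the JSON-LD keywords and read fields directly.
plain = {key: value for key, value in record.items() if not key.startswith("@")}

# Linked data view: the context maps each short key to a full property URI.
expanded = {record["@context"][key]: value for key, value in plain.items()}

print(plain)
print(expanded)
```

A full JSON-LD processor would handle nested contexts and typed values as well; the dictionary lookup here only covers the simplest case.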

* For the scanned documents, the legal regulations apply - ZBW cannot assign licenses here.

2017-11-30 by Joachim Neubert

In the EconBiz portal for publications in economics, we have data from different sources. In some of these sources, most notably ZBW's "ECONIS" bibliographical database, authors are disambiguated by identifiers of the Integrated Authority File (GND) - in total more than 470,000. Data stemming from "Research papers in Economics" (RePEc) contains another identifier: RePEc authors can register themselves in the RePEc Author Service (RAS), and claim their papers. This data is used for various rankings of authors and, indirectly, of institutions in economics, which provides a big incentive for authors - about 50,000 have signed into RAS - to keep both their article claims and personal data up-to-date. While GND is well known and linked to many other authorities, RAS had no links to any other researcher identifier system. Thus, until recently, the author identifiers were disconnected, which precludes the possibility to display all publications of an author on a portal page.

To overcome that limitation, colleagues at ZBW have matched a good 3,000 authors with RAS and GND IDs by their publications (see details here). Making that pre-existing mapping maintainable and extensible however would have meant to set up some custom editing interface, would have required storage and operating resources and wouldn't easily have been made publicly accessible. In a previous article, we described the opportunities offered by Wikidata. Now we made use of it.

2017-03-02 by Joachim Neubert

The Journal of Economic Literature Classification Scheme (JEL) was created and is maintained by the American Economic Association. The AEA provides this widely used resource freely for scholarly purposes. Thanks to André Davids (KU Leuven), who has translated the originally English-only labels of the classification to French, Spanish and German, we provide a multi-lingual version of JEL. Its latest version (as of 2017-01) is published in the formats RDFa and RDF download files. These formats and translations are provided "as is" and are not authorized by AEA. In order to make changes in JEL more easily traceable, we have created lists of inserted and removed JEL classes in the context of the skos-history project.

2017-01-17 by Joachim Neubert

Wikidata is a large database, which connects all of the roughly 300 Wikipedia projects. Besides interlinking all Wikipedia pages in different languages about a specific item – e.g., a person -, it also connects to more than 1000 different sources of authority information.

The linking is achieved by an "authority control" class of Wikidata properties. The values of these properties are identifiers, which unambiguously identify the Wikidata item in external, web-accessible databases. The property definitions include a URI pattern (called "formatter URL"). When the identifier value is inserted into the URI pattern, the resulting URI can be used to look up the authority entry. The resulting URI may point to a Linked Data resource - as is the case with the GND ID property. This, on the one hand, provides a light-weight and robust mechanism to create links in the web of data. On the other hand, these links can be exploited by every application which is driven by one of the authorities to provide additional data: Links to Wikipedia pages in multiple languages, images, life data, nationality and affiliations of the corresponding persons, and much more.
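The substitution step itself is simple and can be sketched in a few lines. The function name `expand_formatter_url` is hypothetical, the GND pattern is written from memory of the `d-nb.info` URI scheme, and the identifier is an arbitrary illustrative value rather than one taken from this article.

```python
def expand_formatter_url(formatter_url, identifier):
    """Insert an identifier into a formatter URL pattern.

    Wikidata marks the position of the identifier with the placeholder $1.
    """
    if "$1" not in formatter_url:
        raise ValueError("formatter URL must contain the $1 placeholder")
    return formatter_url.replace("$1", identifier)


# Assumed pattern for GND identifiers; the identifier value is illustrative.
GND_FORMATTER_URL = "https://d-nb.info/gnd/$1"
uri = expand_formatter_url(GND_FORMATTER_URL, "118540238")
print(uri)
```

Since the pattern is stored once on the property, a change of the target URL scheme only needs to be made in one place rather than on every item.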

Wikidata item for the Indian Economist Bina Agarwal, visualized via the SQID browser

2016-06-03 by Timo Borst

Authors: Timo Borst, Konstantin Ott

In recent years, repositories for managing research data have emerged, which are supposed to help researchers to upload, describe, distribute and share their data. To promote and foster the distribution of research data in the light of paradigms like Open Science and Open Access, these repositories are normally implemented and hosted as stand-alone applications, meaning that they offer a web interface for manually uploading the data, and a presentation interface for browsing, searching and accessing the data. Sometimes, the first component (interface for uploading the data) is substituted or complemented by a submission interface from another application. E.g., in Dataverse or in CKAN data is submitted from remote third-party applications by means of data deposit APIs [1]. However the upload of data is organized and eventually embedded into a publishing framework (data either as a supplement of a journal article, or as a stand-alone research output subject to review and release as part of a ‘data journal’), it definitely means that this data is supposed to be made publicly available, which is often reflected by policies and guidelines for data deposit.

2016-06-03 by Timo Borst

Authors: Timo Borst, Nils Witt

Since their beginnings, libraries and related cultural institutions were confident in the fact that users had to visit them in order to search, find and access their content. With the emergence and massive use of the World Wide Web and associated tools and technologies, this situation has drastically changed: if those institutions still want their content to be found and used, they must adapt themselves to those environments in which users expect digital content to be available. Against this background, the general approach of the EEXCESS project is to ‘inject’ digital content (both metadata and object files) into users' daily environments like browsers, authoring environments like content management systems or Google Docs, or e-learning environments. Content is not just provided, but recommended by means of an organizational and technical framework of distributed partner recommenders and user profiles. Once a content partner has connected to this framework by establishing an Application Program Interface (API) for constantly responding to the EEXCESS queries, the results will be listed and merged with the results of the other partners. Depending on the software component installed either on a user’s local machine or on an application server, the list of recommendations is displayed in different ways: from a classical, text-oriented list, to a visualization of metadata records.

2016-06-03 by Timo Borst

Author: Arne Martin Klemenz

EconBiz – the search portal for Business Studies and Economics – was launched in 2002 as the Virtual Library for Economics and Business Studies. The project was initially funded by the German Research Foundation (DFG) and is developed by the German National Library of Economics (ZBW) with the support of the EconBiz Advisory Board and cooperation partners. The search portal aims to support research in and teaching of Business Studies and Economics with a central entry point for all kinds of subject-specific information and direct access to full texts [1].

As an addition to the main EconBiz service we provide several beta services as part of the EconBiz Beta sandbox. These service developments cover the outcome of research projects based on large-scale projects like EU Projects as well as small-scale projects e.g. in cooperation with students from Kiel University. Therefore, this beta service sandbox aims to provide a platform for testing new features before they might be integrated to the main service (proof of concept development) on the one hand, and it aims to provide a showcase for relevant project output from related projects on the other hand.

2016-05-17 by Timo Borst

2016-03-30 by Joachim Neubert

The "Integrated Authority File" (Gemeinsame Normdatei, GND) of the German National Library (DNB), the library networks of the German-speaking countries and many other institutions, is a widely recognized and used authority resource. The authority file comprises persons, institutions, locations and other entity types, in particular subject headings. With more than 134,000 concepts, organized in almost 500 subject categories, the subjects part - the former "Schlagwortnormdatei" (SWD) - is huge. That would make it a nice resource to stress-test SKOS tools - if it were available in SKOS. A seminar at the DNB on requirements for thesauri on the Semantic Web (slides, in German) provided another reason for the experiment described below.

2015-07-27 by Joachim Neubert

“What’s new?” and “What has changed?” are questions users of Knowledge Organization Systems (KOS), such as thesauri or classifications, ask when a new version is published. Much more so, when a thesaurus existing since the 1990s has been completely revised, subject area for subject area. After four intermediately published versions in as many consecutive years, ZBW's STW Thesaurus for Economics has been re-launched recently in version 9.0. In total, 777 descriptors have been added; 1,052 (of about 6,000) have been deprecated and in their vast majority merged into others. More subtle changes include modified preferred labels, or merges and splits of existing concepts.

Since STW was first published on the web in 2009, we have gone to great lengths to make changes traceable: No concept and no web page has been deleted, everything from prior versions is still available. Following a presentation at DC-2013 in Lisbon, I've started the skos-history project, which aims to exploit published SKOS files of different versions for change tracking. A first beta implementation of Linked-Data-based change reports went live with STW 8.14, making use of SPARQL "live queries" (as described in a prior post). With the publication of STW 9.0, full reports of the changes are available. How do they work?
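The basic idea of comparing two published versions can be illustrated independently of SPARQL. The following sketch is a plain Python stand-in, not the skos-history implementation: it assumes each version has been reduced to a mapping from concept identifier to preferred label, and the identifiers and labels are made up.

```python
def diff_versions(old_concepts, new_concepts):
    """Compare two thesaurus versions given as {concept_id: pref_label}."""
    old_ids, new_ids = set(old_concepts), set(new_concepts)
    return {
        "added": sorted(new_ids - old_ids),
        "removed": sorted(old_ids - new_ids),
        "relabeled": sorted(
            concept_id
            for concept_id in old_ids & new_ids
            if old_concepts[concept_id] != new_concepts[concept_id]
        ),
    }


# Hypothetical sample data standing in for two versions of active concepts.
version_old = {"c1": "Bookkeeping", "c2": "Trade policy"}
version_new = {"c2": "International trade policy", "c3": "Altmetrics"}

report = diff_versions(version_old, version_new)
print(report)
```

In a thesaurus that deprecates rather than deletes, "removed" would mean "no longer among the active concepts", and a real report would also need to follow merges to the replacing concept.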

2014-11-14 by Joachim Neubert

SPARQL queries are a great way to explore Linked Data sets - be it our STW with its links to other vocabularies, the papers of our repository EconStor, or persons or institutions in economics as authority data. ZBW has therefore offered public endpoints for a long time. Yet, it is often not so easy to figure out the right queries. The classes and properties used in the data sets are unknown, and the overall structure requires some exploration. Therefore, we have started collecting queries in our new SPARQL Lab, which are in use at ZBW, and which could serve as examples for others of how to deal with our datasets.

A major challenge was to publish queries in a way that allows not only their execution, but also their modification by users. The first approach to this was pre-filled HTML forms (e.g. http://zbw.eu/beta/sparql/stw.html). Yet that couples the query code with that of the HTML page, and with a hard-coded endpoint address. It does not scale to multiple queries on a diversity of endpoints, and it is difficult to test and to keep in sync with changes in the data sets. Besides, offering a simple text area without any editing support makes it quite hard for users to adapt a query to their needs.

And then came YASGUI, an "IDE" for SPARQL queries. Accompanied by the YASQE and YASR libraries, it offers a completely client-side, customizable, JavaScript-based editing and execution environment. Particular highlights from the libraries' descriptions include:

2014-09-23 by Joachim Neubert

Large library collections, and more so portals or discovery systems aggregating data from diverse sources, face the problem of duplicate content. Wouldn't it be nice if every edition of a work could be collected under a single entry in a result set?

The WorldCat catalogue, provided by OCLC, holds more than 320 million bibliographic records. Since early in 2014, OCLC shares its 197 million work descriptions as Linked Open Data: "A Work is a high-level description of a resource, containing information such as author, name, descriptions, subjects etc., common to all editions of the work. ... In the case of a WorldCat Work description, it also contains [Linked Data] links to individual, oclc numbered, editions already shared in WorldCat." The works and editions are marked up with schema.org semantic markup, in particular using schema:exampleOfWork/schema:workExample for the relation from edition to work and vice versa. These properties have been added recently to the schema.org spec, as suggested by the W3C Schema Bib Extend Community Group.

ZBW contributes to WorldCat, and has 1.2 million OCLC numbers attached to its bibliographic records. So it seemed interesting to see how many of these editions link to works and furthermore to other editions of the very same work.
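How the edition-to-work links allow editions to be collected under one entry can be sketched with a few made-up pairs. The identifiers below are placeholders, not WorldCat URIs; each pair stands for one schema:exampleOfWork statement, and the grouped result corresponds to the inverse schema:workExample direction.

```python
from collections import defaultdict

# Hypothetical (edition, work) pairs standing in for schema:exampleOfWork links.
example_of_work = [
    ("edition/1", "work/A"),
    ("edition/2", "work/A"),
    ("edition/3", "work/B"),
]


def group_editions_by_work(pairs):
    """Invert edition -> work links into a work -> editions mapping."""
    works = defaultdict(list)
    for edition, work in pairs:
        works[work].append(edition)
    return dict(works)


work_example = group_editions_by_work(example_of_work)

# Works with more than one edition are candidates for a merged result entry.
multi_edition_works = [w for w, eds in work_example.items() if len(eds) > 1]
print(work_example, multi_edition_works)
```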

2014-04-09 by Joachim Neubert

ZBW Labs now uses DBpedia resources as tags/categories for articles and projects. The new Web Taxonomy plugin for DBpedia Drupal module (developed at ZBW) integrates DBpedia labels, stemming from Wikipedia page titles, via a comfortable autocomplete plugin into the authoring process. On the term page (example), further information about a keyword can be obtained by a link to the DBpedia resource. This at the same time connects ZBW Labs to the Linked Open Data Cloud.

The plugin is the first one released for Drupal Web Taxonomy, which makes LOD resources and web services easily available for site builders. Plugins for further taxonomies are to be released within our Economics Taxonomies for Drupal project.

2013-09-25 by Joachim Neubert

From the beginning, our econ-ws (terminology) web services for economics have produced tabular output, very much like the results of a SQL query. Not a surprise - they are based on SPARQL, and use the well-defined table-shaped SPARQL 1.1 query results formats in JSON and XML, which can be easily transformed to HTML. But there are services whose results do not really fit this pattern, because they are inherently tree-shaped. This is true especially for the /combined1 and the /mappings service. For the former, see our prior blog post; an example of the latter may be given here: The mappings of the descriptor International trade policy are (in HTML) shown as:

| concept | prefLabel | relation | targetPrefLabel | targetConcept | target |
|---|---|---|---|---|---|
| <http://zbw.eu/stw/descriptor/10616-4> | "International trade policy" @en | <http://www.w3.org/2004/02/skos/core#exactMatch> | "International trade policies" @en | <http://aims.fao.org/aos/agrovoc/c_31908> | <http://zbw.eu/stw/mapping/agrovoc/target> |
| <http://zbw.eu/stw/descriptor/10616-4> | "International trade policy" @en | <http://www.w3.org/2004/02/skos/core#closeMatch> | "Commercial policy" @en | <http://dbpedia.org/resource/Commercial_policy> | <http://zbw.eu/stw/mapping/dbpedia/target> |

That's far from perfect - the "concept" and "prefLabel" entries of the source concept(s) of the mappings are identical over multiple rows.
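One way to picture the tree shape is to fold the repeated source columns into a parent node with a list of mappings. The sketch below uses the two rows from the table above; the function name `nest_mappings` and the output layout are assumptions for illustration and not the format actually returned by the service.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"

# The two result rows from the table above, reduced to the relevant columns.
rows = [
    {
        "concept": "http://zbw.eu/stw/descriptor/10616-4",
        "prefLabel": "International trade policy",
        "relation": SKOS + "exactMatch",
        "targetPrefLabel": "International trade policies",
        "targetConcept": "http://aims.fao.org/aos/agrovoc/c_31908",
    },
    {
        "concept": "http://zbw.eu/stw/descriptor/10616-4",
        "prefLabel": "International trade policy",
        "relation": SKOS + "closeMatch",
        "targetPrefLabel": "Commercial policy",
        "targetConcept": "http://dbpedia.org/resource/Commercial_policy",
    },
]


def nest_mappings(rows):
    """Group flat result rows into one node per source concept."""
    tree = {}
    for row in rows:
        node = tree.setdefault(
            row["concept"], {"prefLabel": row["prefLabel"], "mappings": []}
        )
        node["mappings"].append(
            {
                "relation": row["relation"],
                "targetConcept": row["targetConcept"],
                "targetPrefLabel": row["targetPrefLabel"],
            }
        )
    return tree


tree = nest_mappings(rows)
```

The source concept and its label now appear once, with the two mappings as children.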

2013-08-07 by Joachim Neubert

How can we get most out of a thesaurus to support user searches? Taking advantage of SKOS thesauri published on the web, their mappings and the latest Semantic Web tools, we can support users both with synonyms (e.g. "accountancy" for "bookkeeping") for their original search terms as well as with suggestions for neighboring concepts.
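As a rough illustration of the idea, the following sketch expands a search term with synonyms and neighboring concepts from a tiny in-memory thesaurus. Only the "accountancy"/"bookkeeping" pair comes from the text; the related concept, the data layout and the function name `expand_search` are hypothetical, and a real implementation would query the published SKOS data instead.

```python
# Hypothetical miniature thesaurus: preferred label -> alternative labels
# (synonyms) and related concepts. "auditing" is a made-up neighbor.
THESAURUS = {
    "bookkeeping": {"altLabels": ["accountancy"], "related": ["auditing"]},
}


def expand_search(term, thesaurus):
    """Return synonyms and neighboring concepts for a search term."""
    term = term.lower()
    for pref_label, entry in thesaurus.items():
        labels = [pref_label] + entry["altLabels"]
        if term in labels:
            synonyms = [label for label in labels if label != term]
            return {"synonyms": synonyms, "suggestions": entry["related"]}
    # Unknown terms are passed through without expansion.
    return {"synonyms": [], "suggestions": []}


result = expand_search("accountancy", THESAURUS)
print(result)
```

Synonyms can be added to the query silently, while neighboring concepts are better offered to the user as suggestions, since they change the meaning of the search.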

2013-04-24 by Joachim Neubert

As a laboratory for new, Linked Open Data based publishing technologies, we now develop the ZBW Labs web site as a Semantic Web Application. The pages are enriched with RDFa, making use of Dublin Core, DOAP (Description of a Project) and other vocabularies. The schema.org vocabulary, which is also applied through RDFa, should support search engine visibility.

With this new version we aim at a playground to test new possibilities in electronic publishing and linking data on the web. At the same time, it facilitates editorial contributions from project members about recent developments and allows comments and other forms of participation by web users.

As it is based on Drupal 7, RDFa is built in (in the CMS core) and is easily set up by configuration on a field level. Enhancements are made through the RDFx, Schema.org and SPARQL Views modules. A lot of other ready-made components in Drupal (most noteworthy the Views and the new Entity Reference modules) make it easy to provide and interlink the data items on the site. The current version of the Zen theme enables HTML5 and the use of RDFa 1.1, and permits a responsive design for smartphones and pads.